- Word

Shannon entropy

- Image

- Description

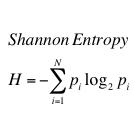

Shannon entropy, introduced by mathematician Claude Shannon, is a measure of the unpredictability of a "message source" (or, equivalently, of an ensemble of messages). The message source can be any system that produces output whose values can be modeled by a random variable. When logarithms are taken base 2, Shannon entropy is measured in bits per message, averaged over the source's output. Shannon showed that it is a lower bound on the average number of bits per message that any lossless encoding of the source can achieve, and that this bound can be approached arbitrarily closely (Shannon's source coding theorem).
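For a discrete source whose messages x occur with probabilities p(x), the entropy in bits is H(X) = -Σ p(x) log2 p(x). The minimal Python sketch below assumes the probabilities are known, or estimated from the relative frequencies of observed messages. The helper names `shannon_entropy` and `empirical_entropy`, and the three-message prefix code, are hypothetical illustrations and are not taken from any particular library.

```python
import math
from collections import Counter


def shannon_entropy(probabilities):
    """Entropy in bits of a discrete distribution (probabilities summing to 1)."""
    # Terms with p == 0 are skipped: p * log2(p) tends to 0 as p -> 0.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def empirical_entropy(messages):
    """Estimate entropy from observed messages using their relative frequencies."""
    counts = Counter(messages)
    total = len(messages)
    return shannon_entropy(c / total for c in counts.values())


print(shannon_entropy([0.5, 0.5]))    # fair coin: 1.0 bit per message
print(shannon_entropy([0.25] * 4))    # four equally likely messages: 2.0 bits
print(shannon_entropy([0.9, 0.1]))    # biased coin: about 0.469 bits (less unpredictable)
print(empirical_entropy("AABBBBCC"))  # frequencies 0.25, 0.5, 0.25: 1.5 bits

# Link to encoding: for messages with probabilities 0.5, 0.25, 0.25, a
# hypothetical prefix code with word lengths 1, 2, 2 averages exactly
# 1.5 bits per message, meeting the entropy bound.
probs = [0.5, 0.25, 0.25]
code = {"A": "0", "B": "10", "C": "11"}
avg_len = sum(p * len(word) for p, word in zip(probs, code.values()))
print(avg_len)  # 1.5
```

The prefix code meets the bound exactly only because every probability is a power of 1/2; for other distributions, a symbol-by-symbol code generally averages somewhat more than H(X) bits per message.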

- Topics

- Entropy, Information Theory

- Difficulty

- 1